What Shines

A review of The Temple of the Golden Pavilion.

Spoilers ahead.

TLDR: Can acts of destruction be virtuous?

The point of this post is to consider how an act of destruction can become moral. Whether violence is moral is outside its scope, as is terrorism as an extension of moral philosophy.

Intro

1950 saw the famous Kinkaku-ji destroyed. This was the second time the temple had been destroyed, the first being nearly 500 years earlier, when a civil war broke out and ushered in Japan's Warring States period. Hayashi Yoken, a Zen monk in training, left little documentation of his motives but was said to be a fanatic and a schizophrenic with a persecution complex. The temple, known in English as the Golden Pavilion, was reconstructed with more gold leaf than before and remains a popular destination to this day. In The Temple of the Golden Pavilion, Yukio Mishima re-imagines this boy's act as that of a troubled adolescent.

Painted image of Kinkaku-ji before it burned down in 1950

Consider the Virtue of Action

The introduction to the book by Nancy Wilson Ross identifies psychological trauma as the impetus for Mizoguchi's troubled relationship with beauty and action. I agree that some kind of intervention is preferable when one finds oneself in a state of “sick consciousness”, but what I reject in Ross is the minimization of Mizoguchi's reasoning. If Zen is the killing of a cat, and the nature of Zen can be the placement of shoes on the head, then isn't Zen found in the action rather than in the reasoning? Mishima thus asks us to consider the effective action Mizoguchi takes once he is fully disillusioned with the iconography of the temple.

I do not take it that Yukio Mishima imagines Mizoguchi as a psychotic boy lashing out from misplaced impotence or at the perceived vanity of the monks. That is not to dismiss Mizoguchi's mental troubles; I am totally willing to grant that his fixation on the temple may stand in for a sense of impotence or a classic psychotic break.

But is Mizoguchi psychotic? Well, potentially. After a fateful encounter with his childhood crush, Uiko, leaves him speechless and somewhat bumbling, Mizoguchi's outlook is clarified by the following passage:

Day and night I wished for Uiko's death. I wished that the witness of my disgrace would disappear. If only no witnesses remained, my disgrace would be eradicated from the face of the earth. Other people are all witnesses. If no other people exist shame could never be born in the world.

In this formative moment, Mizoguchi's preconception of beauty as imparting a sort of indelible innocence is torn from him and replaced by deep shame and suspicion. If we take the events at face value, his self-consciousness seems to arise from the revelation of his own inaction rather than from Uiko's imminent rejection; she simply happens to be a “witness” to this shame. I believe the passage below is quite unsubtle in intimating the extent of Mizoguchi's psychological state:

As usual, it occurred to me that words were the only things that could possibly save me from this situation. This was a characteristic misunderstanding on my part. When action was needed, I was always absorbed in words; for words proceeded with such difficulty from my mouth that I was intent on them and forgot all about action. It seemed to me that actions, which are dazzling, varied things, must always be accompanied by equally dazzling and equally varied words.

One can ask: in a less pressing situation, in which Mizoguchi did not have to contend with the suicide of a peer, the death of his father, an inattentive mother, the frivolousness of the temple monks, an inner dialogue on the mundanity of beauty, and a speech disability, would the outcome be the same?

It may seem unlikely, but I propose that morality is not easily gained. Much of this book reads as a meditation on action and the virtues that beauty inhabits, rather than as the sick manifestation of a troubled boy.

Concerning Morality and Beauty

While reading, I was primed to expect the book to end with the murder of a sex worker. For what it's worth, I also initially took most of the book to be about a boy dealing with a traumatic kind of impotence and depression. The story of Elliot Rodger was at the top of my mind; I was like, “for sure this guy murders a prostitute, right?” However, Mizoguchi often found himself in the company of women, and when the time for sex came he found himself more fixated on the temple, never once seeing women as the source of his impotence. Let us take a look at pages 203 to 205.

Thus it was that she unfastened her sash-bustle before my eyes and untied the various cords. Then with a silky shriek, the sash itself came undone, and, released from this constriction, the neck of her kimono opened up. I could vaguely make out the woman's white breasts. Putting her hand into her kimono she scooped out her left breast and held it out to me.

It would be untrue to say that I did not feel dizzy. I looked at her breast. I looked at it with minute care. Yet I remained in the role of witness. That mysterious white point which I had seen in the distance from above the temple gate had not been a material globe of flesh like this. The impression had been fermenting so long within me that the breast which I now saw seemed to be nothing but flesh, nothing but a material object. This flesh did not in itself have the power to appeal or to tempt. Exposed there in front of me, and completely cut off from life, it merely served as a proof of the dreariness of existence.

Still, I do not want to say anything untrue, and there is no doubt that at the sight of her white breast I was overcome by dizziness. The trouble was that I looked too carefully and too completely, so that what I saw went beyond the stage of being a woman's breast and was gradually transformed into a meaningless fragment.

It was then that the wonder occurred. After undergoing this painful process, the woman's breast finally struck me as beautiful. It became endowed with the sterile and frigid characteristics of beauty and, while the breast remained before me, it slowly shut itself up within the principle of its own self. Just as a rose closes itself up within the essential principle of a rose. Beauty arrives late for me. Other people perceive beauty quickly, and discover beauty and sensual desire at the same moment; for me it always comes far later. Now in an instant the woman's breast regained its connection with the whole, it surmounted the state of being mere flesh and became an unfeeling, immortal substance related to eternity.

I hope that I am making myself understood.

The Golden Temple once more appeared before me. Or rather, I should say that the breast was transformed into the Golden Temple.

I was certainly not intoxicated by my understanding. My understanding was trampled underfoot and scorned; naturally enough, life and sensual desire underwent the same process. But my deep feeling of ecstasy stayed with me and for a long time I sat as though paralyzed opposite the woman's naked breast.

I was sitting there when I met the woman's cold, scornful look. She put her breast back into the kimono. I told her that I must leave. She came to the entrance and closed the door after me noisily.

Until I returned to the temple, I remained in the midst of ecstasy. In my mind's eye I could see the Golden Temple and the woman's breast coming and going one after the other. I was overcome with an impotent sense of joy.

Yet when the outline of the temple began to emerge through the dark pine forest, which was soughing in the wind, my spirits gradually cooled down, my feeling of impotence became predominant and my intoxication changed into hatred, a hatred for I knew not what.

So once again I have been estranged from life!

I do not see this as a metaphor for misplaced rage at the woman. Rather, there is a morally bound impotence arising from his relationship with the pavilion. Mizoguchi is not driven by despotism or by a lack of mental health care. Nor should we consider him a theological fanatic. Mizoguchi is driven to act by a moral duty to his ideas. To deny the moral impetus of his destructive intent, we would have to ignore the allegorical dialogue between Kashiwagi and Mizoguchi at the core of this book.

Nansen Kills the Cat

In a koan from the collection compiled by Zen master Wumen Huikai, the tale of Nansen and Joshu is told. Nansen kills a cat because his students cannot answer a question on the nature of Zen. Joshu, who had been away, arrives and is told the news of the cat. He places his sandal on his head and walks out. Nansen responds that had Joshu been there, he would have saved the cat. I do not claim any standing as a Zen practitioner, but the idea of an individual, irrational act as an expression of Zen has intrigued me since I first heard this story.

In describing the Zen of Nansen Kills the Cat, Roshi Philip Kapleau observes:

In Zen, it is said that the highest truth is beyond knowing….True Nature is free from all-knowing and not-knowing. It surpasses all concepts of right and wrong, of this and that — “cat has the Buddha nature,” “the cat doesn’t have the Buddha nature”… “dog has the Buddha nature,” “dog doesn’t have the Buddha nature”… “is the enlightened person subject to the law of cause and effect, or is he not?” In every one of these ideas, we are obscuring the wholeness of our True Nature…. Nansen with one stroke cuts out all of these delusions of the monks. Like a surgeon with a scalpel, he cuts out the cancer of this contentious mind.

There is no such thing as individual knowledge….knowledge is the sea of humanity, the field of humanity, the general condition of human existence

What Mizoguchi does as an act of Zen is possibly motivated by his liaison with beauty. Let us consider some dialogue between Kashiwagi and Mizoguchi.

Kashiwagi: Human beings possess the weapon of knowledge in order to make life bearable. For animals such things are not necessary, as animals don't need knowledge or anything of the sort to make life bearable. But human beings do need something, and with knowledge they can make the very intolerableness of life a weapon, though at the same time that intolerableness is not reduced in the slightest. That's all there is to it.

Mizoguchi: Don't you think there is some other way to bear life?

Kashiwagi: No, I don't. Apart from that, there's only madness or death.

Mizoguchi: Knowledge can never transform the world… What transforms the world is action. There's nothing else.

Kashiwagi: There you go… Don't you see that the beauty of this world, which means so much to you, craves sleep, and that in order to sleep it must be protected by knowledge? You remember that story of Nansen killing a kitten… The cat in that story was incomparably beautiful. The reason the priests from the two halls of the temple quarreled about the cat was that they both wanted to protect the kitten, to look after it, to let it sleep snugly within their own particular cloaks of knowledge. Now, Father Nansen was a man of action, so he went and killed the kitten with his sickle and had done with it, but when Joshu came along later he removed his shoes and put them on his own head. What Joshu wanted to say was this: that he was fully aware that beauty is a thing which must sleep and which, in sleeping, must be protected by knowledge, but there is no such thing as individual knowledge, a particular knowledge belonging to one special person or group. Knowledge is the sea of humanity, the field of humanity, the general condition of human existence. I think that is what he wanted to say. Now you want to play the role of Joshu, don't you? Well, beauty, the beauty that you love so much, is an illusion of the remaining part, the excessive part which has been consigned to knowledge. It is an illusion of the other way to bear life, which you mentioned. One could say that, in fact, there is no such thing as beauty. What makes the illusion so strong… is precisely knowledge… Beauty is never a consolation. It may be a woman, it may be one's wife, but it's never a consolation. Yet from the marriage between this beautiful thing, which is never a consolation, on the one hand, and knowledge on the other, something is born. It is as evanescent as a bubble and utterly hopeless, yet something is born, and that something is what people call art.

Mizoguchi: Beautiful things… those are now my most deadly enemies.

Mizoguchi Destroys the Temple

Mizoguchi is exposed to murder, famine, the death of his father, an absentee mother, and an early childhood mired in ridicule and a sense of shame. As his mental state deteriorates, it is not any of these realities that sparks the idea to burn down the temple. It is hearing how the people in a tavern view the temple monks: tax burdens who do little more than chase sex workers. At this moment, Mizoguchi sets his mind on destroying asceticism. Having lost any illusion of beauty in the practice of the monks, Mizoguchi must destroy that beautiful temple.

Works Cited:

Mishima, Y., 1977. The Temple of the Golden Pavilion. New York: Knopf.

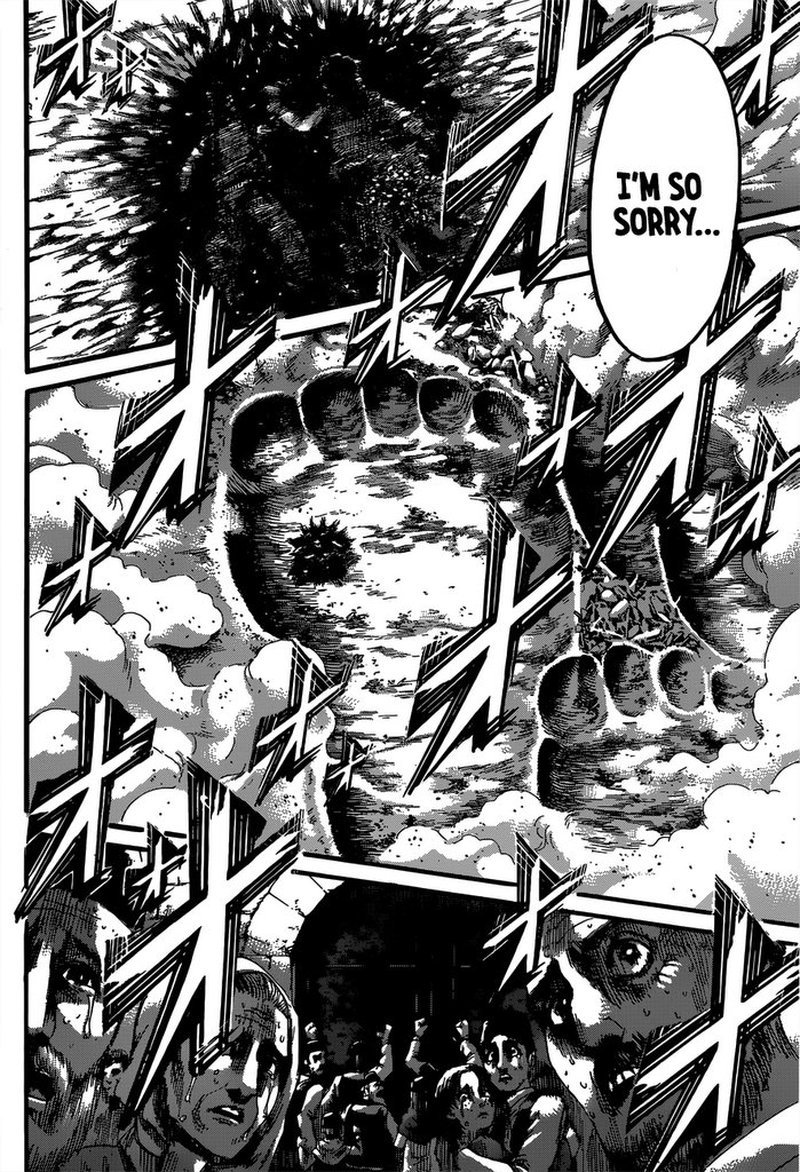

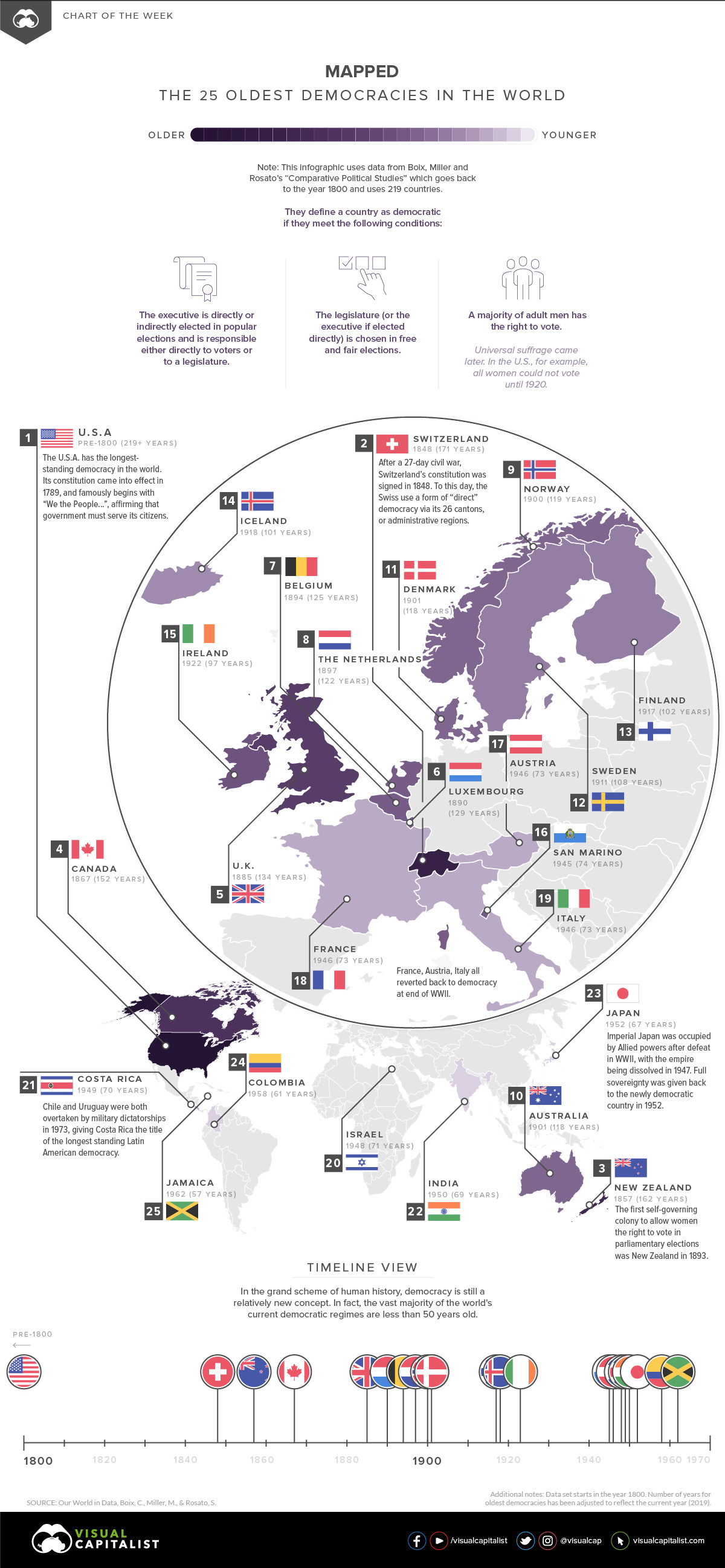

Here we find children in a predicament similar to our protagonist's, doing what they feel they must.
